You know the face but the name escapes you... The last time you saw her she told you something important, but you don't know what it was... He's out of context and you're hoping for a clue where he's from.

That name at the ready. Your connections to hand. Unlock your superpower. Get Intro on the App Store.

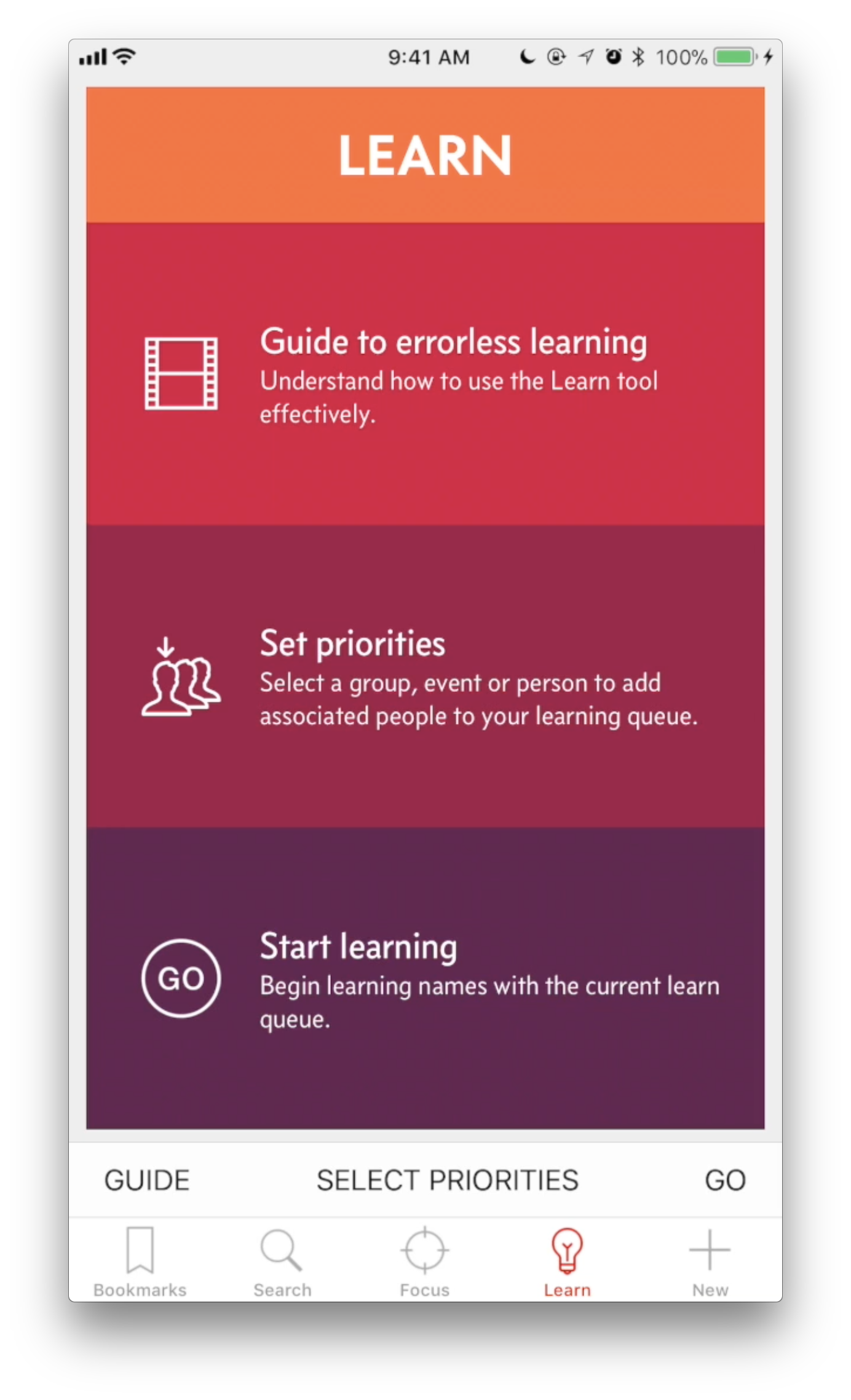

Learn.

Maybe in life, you learn from your mistakes. But in learning names, errors undermine recall.

Intro provides a face/voice and name learning tool. Start with your entire personal network, or focus your learning on a person, group, or context. Either way, you'll have a deceptively simple learning experience that uses errorless learning techniques with spaced retrieval. Basically, learning that understands how your brain is wired.

You will learn names, and you will remember them. You will be amazed. Honestly, it's like unlocking a superpower.

(No endorsement is implied from the people pictured in the Learn video, nor the organisations they represent.)

Connect.

Intro can be populated with your data from iPhone Contacts, and can keep the Contacts app up to date. (Or not, your choice.)

Update contact details, sure. But Intro does far more. Easily re-order details to put the most important things first, on a per-contact basis. Merge duplicate contacts into a single entry.

Try doing either of those in Contacts... you can't. Yet Intro will update Contacts with these changes too.

And of course, you can start calls and messages right here. You may never need to go back to Contacts again.

Pull any thread.

But there's more to people than just phone numbers & email.

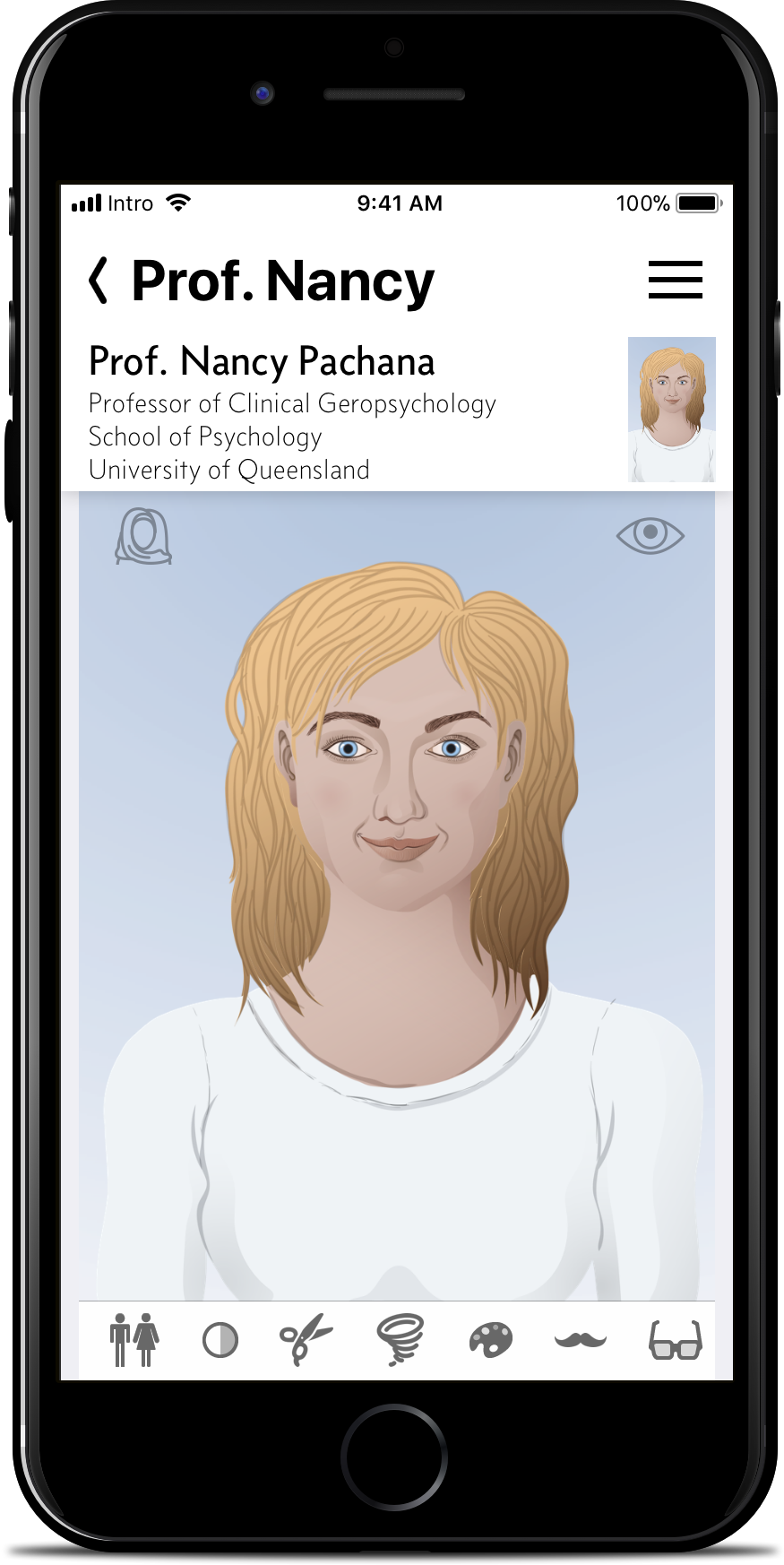

Dial up an avatar to tag a person with a range of physical attributes. (Coming soon: tag a person with a vocal profile if that's your thing.)

You can then dial these up in search. So next time you see that blonde woman in the supermarket, you'll quickly find who you're talking to, even if you've drawn a blank on both the name and where she's from.

Give voice.

Coming soon: A voice can paint 1,000 pictures. Create a vocal profile of your contacts, covering gender, accent, confidence, the complexity of the language they use, their height, and other notes.

You can search on these and other criteria later to find someone again—whether they're in current earshot or you're just hearing them again in your own mental replay.

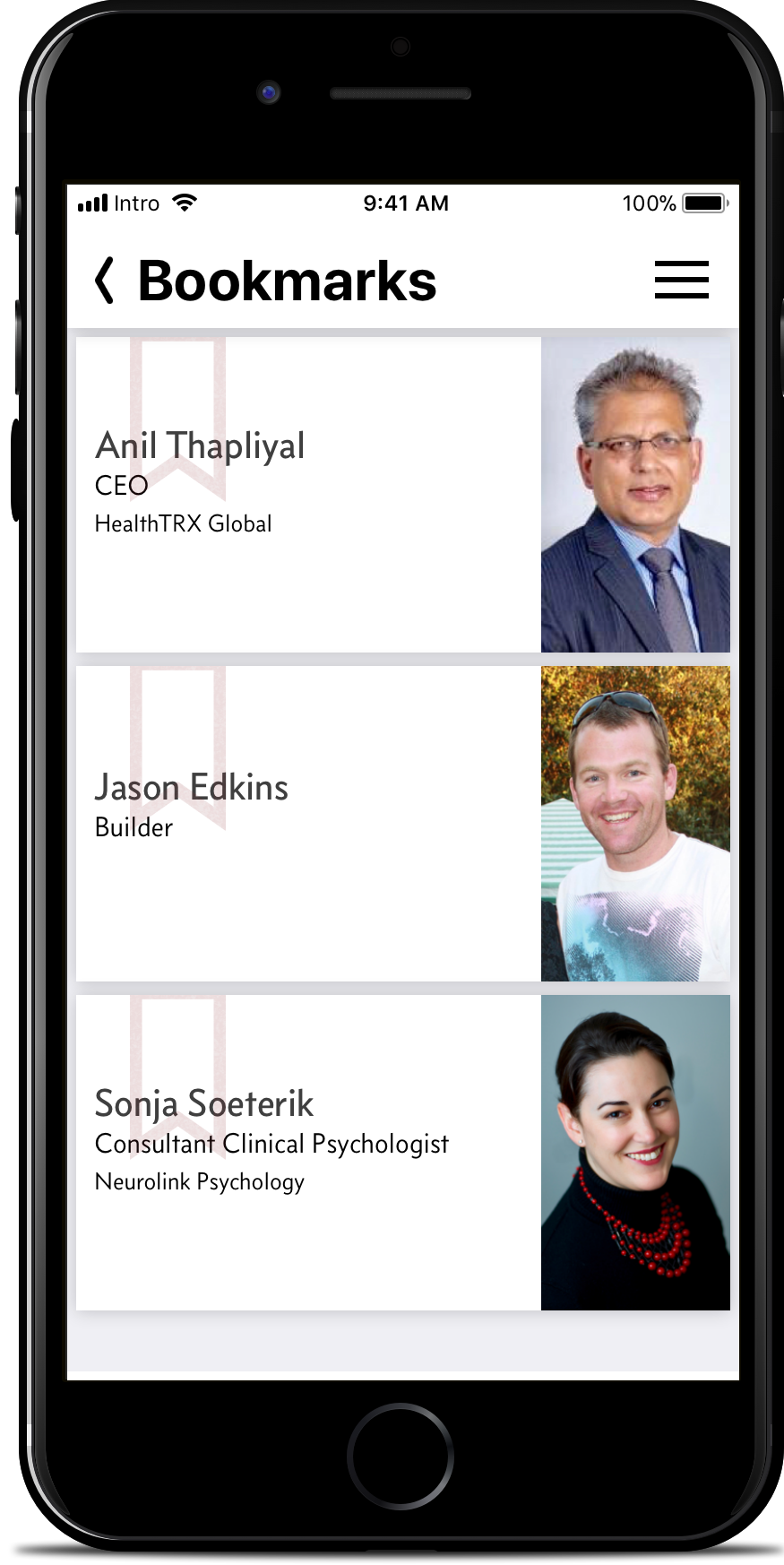

Bookmark.

Swiping, tapping, and searching are all well and good when you need them. But wouldn't it be better to just have the info at a glance?

Bookmark a person as a priority and they’ll be in the first list you see as soon as the app launches.

So whatever your day holds you'll have the names you most need right to hand—for your key appointments, and for that person you just met at the party.

“How secure are my servers? I don’t even have a server. Your data stays on your device. Period. This isn’t a social network, and it’s not a communication platform. The Intro app isn’t about you sharing your personal network with me, or with anyone else. It’s a completely private tool you use, for your own benefit.”

There's even more to Intro than shown here. See an overview of your relationship with each person, and quickly add additional information and context. Link People, Groups, and Events, with associated notes, to let you access connections and relationships in context. And more. Sign up now to get early access to Intro in the near future and experience the full app.